After this lesson, students should:

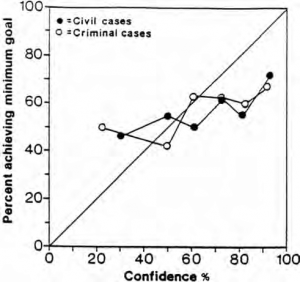

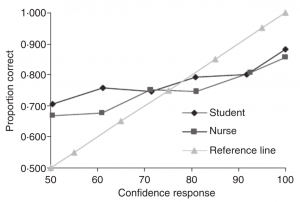

- Be wary of high levels of confidence.

- Understand the different ways in which scientists in different fields express credence levels (e.g., 95% confidence intervals, error bars).

- Appreciate that one can improve the calibration of one's credence levels, and that one should strive for accurate calibration.