|

|


|

| |

|

| </tabber>

| <restricted>

| |

|

| |

|

| == Useful Resources ==

| | {{#restricted:{{3.2 Calibration of Credence Levels}}}}{{NavCard|prev=3.1 Probabilistic Reasoning|next=4.1 Signal and Noise}} |

| | |

| <tabber>

| |

| | |

| |-|Lecture Video=

| |

| | |

| <br /><center><youtube>cKFOQcTVps4</youtube></center><br />

| |

| | |

| |-|Discussion Slides=

| |

| | |

| {{LinkCard | |

| |url=https://docs.google.com/presentation/d/1d4kCpqDxyiL8IhzqQEB4sY9FziyOkzrTuvvnBPsSlwo/

| |

| |title=Discussion Slides Template

| |

| |description=The discussion slides for this lesson.

| |

| }}

| |

| <br />

| |

| | |

| |-|Handouts and Activities=

| |

| | |

| {{LinkCard

| |

| |url=https://docs.google.com/forms/d/19tP7vDrHZkGgc57xPg191OWZVFRMaWU_n9CRLAtyoJA/

| |

| |title=Future Predictions Google Form Template

| |

| |description=A Google form for you to copy for the future predictions activity.}}

| |

| {{LinkCardInternal

| |

| |url=:File:Calibration of Credence Levels - Handout.pdf

| |

| |title=AOT and Growth Mindset Handout

| |

| |description=Worksheet for the AOT and growth mindset activities.}}

| |

| {{LinkCardInternal

| |

| |url=:File:Test Your Knowledge Survey.pdf

| |

| |title="Test Your Knowledge" Handout

| |

| |description=An optional handout to further test your students' calibration.}}

| |

| <br />

| |

| | |

| |-|Readings and Assignments=

| |

| | |

| {{LinkCard

| |

| |url=https://drive.google.com/file/d/1UrN8GPlES9ra7_uI5VwdRQ-p98AL_des/view?usp=drivesdk

| |

| |title=How to Be Less Terrible at Predicting the Future

| |

| |description=Freakonomics podcast about superforecasters and calibration of credence levels.}}

| |

| {{LinkCardInternal

| |

| |url=:File:If You Say Something Is "Likely," How Likely Do People Think It Is - Mauboussin, Maubossin.pdf

| |

| |title=If You Say Something is "Likely," How Likely Do People Think It Is?

| |

| |description=Article on words of estimative probability.}}

| |

| <br />

| |

| | |

| </tabber>

| |

| | |

| == Recommended Outline ==

| |

| | |

| === Before Class ===

| |

| | |

| Prepare for the [[#Future Predictions Resolution|future predictions activity]] by making copies of the [[#Useful Resources|discussion slides and Google Form]]. Also make sure to print [[:File:Calibration of Credence Levels - Handout.pdf|the worksheet]] for the [[#Actively Open-minded Thinking and Growth Mindset Surveys|actively open-minded thinking and growth mindset surveys]].

| |

| | |

| === During Class ===

| |

| | |

| {| class="wikitable" style="margin-left: 0px; margin-right: auto;"

| |

| |5 Minutes

| |

| |Introduce the lesson and go over the plan for the day. Make sure people have groups, spokespeople, etc.

| |

| |-

| |

| |6 Minutes

| |

| |[[#Future Predictions Resolution|Resolve the future predictions activity]] that we [[3.1 Probabilistic Reasoning#Future Predictions|started last lesson]].

| |

| |-

| |

| |18 Minutes

| |

| |Go through the [[#Concept Review|concept review questions]].

| |

| |-

| |

| |18 Minutes

| |

| |Do the [[#Actively Open-minded Thinking and Growth Mindset Surveys|actively open-minded thinking and growth mindset surveys]].

| |

| |-

| |

| |15 Minutes

| |

| |If you have time remaining, have the students do the [[#Self-calibration Activity|self-calibration activity]].

| |

| |}

| |

| | |

| === After Class ===

| |

| | |

| If you have multiple sections, compile the results from the [[#Future Predictions Resolution|future predictions]] activity. Do the same for the [[#Actively Open-minded Thinking and Growth Mindset Surveys|actively open-minded thinking and growth mindset surveys]].

| |

| | |

| == Lesson Content ==

| |

| | |

| === Future Predictions Resolution ===

| |

| | |

| It's now time to find out how well calibrated our students are! This builds upon the activity at the end of the [[3.1 Probabilistic Reasoning#Future Predictions|last lesson]]. This will give the students hands-on experience with calibrating their credence levels by having them reflect on their own predictions.

| |

| | |

| ==== Instructions ====

| |

| | |

| {| class="wikitable" style="margin-left: 0px; margin-right: auto;"

| |

| |1 Minute

| |

| |Remind the students of the predictions they made in the last section. Have them take out the handouts and/or photos they took so that they have all of their predictions on hand.

| |

| |-

| |

| |2 Minutes

| |

| |Show the Google Form you made prior to class. There should be one question for each credence bracket (five in total). For each bracket, have the students fill in whether the prediction they made came true or not. Make sure to record the results of each form.

| |

| |-

| |

| |3 Minutes

| |

| |Reveal the form results to the students. Ask them to share some of the predictions they made and any takeaways for how they could improve their calibration going forward.

| |

| |}

| |

| | |

| === Concept Review ===

| |

| | |

| ==== Instructions ====

| |

| | |

| {| class="wikitable" style="margin-left: 0px; margin-right: auto;"

| |

| |4 Minutes

| |

| |[[#Summary Discussion|Summary Discussion]]

| |

| |-

| |

| |4 Minutes

| |

| |[[#Question 1|Question 1]]

| |

| |-

| |

| |4 Minutes

| |

| |[[#Question 2|Question 2]]

| |

| |-

| |

| |4 Minutes

| |

| |[[#Question 3|Question 3]]

| |

| |-

| |

| |2 Minutes

| |

| |[[#Question 4|Question 4]]

| |

| |}

| |

| | |

| ==== Summary Discussion ====

| |

| | |

| In your small group, explain the ideas of calibration and overconfidence using language that would help a friend or family member understand the concepts.

| |

| | |

| ==== Question 1 ====

| |

| | |

| [[File:Credence Level Weather Doctors.png|thumb|Weather forecaster and physician calibration curves.]]

| |

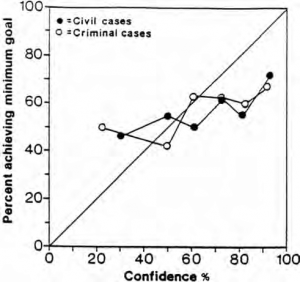

| The figure shows calibration curves for weather forecasters predicting precipitation and physicians diagnosing cases of pneumonia. Notice that the weather forecasters appear to be much better calibrated. However, at the higher probability end, there's still a deviation between the predictions the weather forecasters made and the actual chance of rain. What does that mean in terms of how forecasters are calibrated? How might they further improve their calibration? [[:File:When Overconfidence Becomes a Capital Offense - Plous.pdf|(Source)]]

| |

| {{BoxAnswer|When there's a high chance of rain (about 80%+), the forecasters tended to be somewhat more confident that it would rain than they should have been. With this in mind, they should be wary when they're giving predictions with a high credence level. To be truly calibrated, they shouldn't be making predictions of rain with 100% confidence.}}

| |

| ==== Question 2 ====

| |

| | |

| How might the physicians improve their calibration?

| |

| {{BoxAnswer|The physicians seem to be very poorly calibrated. They clearly have a lot of room to improve. One obvious way is to note that none of their predicted pneumonia cases had greater than a 15% chance of actually being true. The physicians could consider this statistic and use it as a ceiling for their credence levels until they have access to better methods of prediction.}}

| ==== Question 3 ====

| |

| | |

| The table below shows the calibration of predictions for one of FiveThirtyEight's 2016 primary elections models, which forecasts the probability of candidates winning their state primary or caucus.

| |

| | |

| {| class="wikitable" style="margin-left: auto; margin-right: auto;"

| |

| !Candidate's Chance of Winning

| |

| !Number of Candidates with that Win Chance

| |

| !Number of Candidates Predicted to Win

| |

| !Number of Candidates Who Actually Won

| |

| |-

| |

| |95-100%

| |

| |31

| |

| |30.5

| |

| |30

| |

| |-

| |

| |75-94%

| |

| |15

| |

| |12.5

| |

| |13

| |

| |-

| |

| |50-74%

| |

| |11

| |

| |6.9

| |

| |9

| |

| |-

| |

| |25-49%

| |

| |12

| |

| |4.0

| |

| |2

| |

| |-

| |

| |5-24%

| |

| |22

| |

| |2.4

| |

| |1

| |

| |-

| |

| |0-4%

| |

| |93

| |

| |0.9

| |

| |1

| |

| |}

| |

| {{BoxCaution|Students are often confused about how to read this table. Using the first row as an example, 31 candidates had been predicted to win with between 95% and 100% confidence. Based on these estimates, about 30.5 should have won and there were 30 actual winners. Encourage students to use a calculator to compute the percentages.}}

| |

| {{Line}}

| |

| Are the predictions well-calibrated? How do you know?

| |

| {{BoxAnswer|For a well-calibrated model, the win probability range (credence level) should match the percentage of candidates in that range who actually won. Dividing the actual number of winners by the number of forecasts in each row gives a ratio that should fall within the quoted percentage range. For example, the model is perhaps slightly underconfident in the 50-74% range, where 9 of 11 candidates (about 82%) won, a few more than the 6.9 predicted. However, given the small samples and the noisiness of the data, the model did pretty well.}}
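The division described in the answer above can be sketched in a few lines of Python for instructors who want to check the percentages quickly. This is a minimal illustration, not part of the official lesson materials: the function names are hypothetical, the rows are copied from the table above, and treating each range's printed endpoints as inclusive bounds is a simplifying assumption.

```python
# Rows copied from the table above:
# (range label, low %, high %, number of forecasts, actual winners)
ROWS = [
    ("95-100%", 95, 100, 31, 30),
    ("75-94%", 75, 94, 15, 13),
    ("50-74%", 50, 74, 11, 9),
    ("25-49%", 25, 49, 12, 2),
    ("5-24%", 5, 24, 22, 1),
    ("0-4%", 0, 4, 93, 1),
]


def observed_win_rate(forecasts, winners):
    """Percentage of candidates in a range who actually won."""
    return 100.0 * winners / forecasts


def classify(low, high, rate):
    """Compare the observed rate with the quoted range (assumed inclusive)."""
    if rate > high:
        return "forecast too low"   # more winners than the range suggests
    if rate < low:
        return "forecast too high"  # fewer winners than the range suggests
    return "within range"


for label, low, high, forecasts, winners in ROWS:
    rate = observed_win_rate(forecasts, winners)
    print(f"{label:>8}: {rate:5.1f}% observed -> {classify(low, high, rate)}")
```

With only 11 to 31 candidates in most rows, a single result can move the observed rate by several percentage points, so the labels are best read as a rough guide rather than a verdict.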

| |

| {{Line}}

| |

| Which "Win Probability Ranges" are best calibrated?

| |

| {{BoxAnswer|The 95-100%, 75-94%, and 0-4% ranges are all very well calibrated, perhaps because it's easier to make predictions when there is a very strong polling preference.}}

| |

| ==== Question 4 ====

| |

| | |

| [[File:Error Bar Demo.png|thumb]]

| |

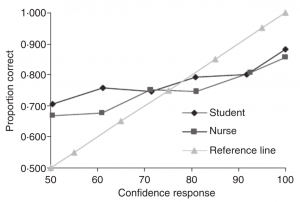

| You want to measure the temperature in Berkeley. You send 100 students around Berkeley to measure the temperature at a given time. You get all the data back, plot it, and calculate the average. You put an error bar on the average value. The error bar below best describes which of the temperature data sets? Is this a trick question?

| |

| {{BoxAnswer|This ''is'' a trick question! It depends on the credence level that you use as the convention for your error bars. If the error bar describes temperature 1, it corresponds to about a 30% credence level; for temperature 2, it is 68%; and for temperature 3, it is almost 100%.}}

| |

| === Actively Open-minded Thinking and Growth Mindset Surveys ===

| |

| | |

| The last activity is a lead-in to the plenary session. Students will fill out two surveys: the first is the actively open-minded thinking (AOT) survey and the second is the growth mindset survey. In the PlayPosit video, Professor Gopnik talked about the concept of "superforecasters". These people scored highly on the actively open-minded thinking and growth mindset surveys, and we want students to reflect on these concepts. The surveys are not graded; their purpose is pedagogical, to support self-reflection and metacognition. Professor Gopnik will talk more about them in the plenary.

| |

| {{LinkCardInternal

| |

| |url=:File:Calibration of Credence Levels - Handout.pdf

| |

| |title=AOT and Growth Mindset Handout

| |

| |description=Worksheet for the AOT and growth mindset activities.}}

| |

| ==== Instructions ====

| |

| | |

| {| class="wikitable" style="margin-left: 0px; margin-right: auto;"

| |

| |6 Minutes

| |

| |Have the students fill out both surveys on the [[:File:Calibration of Credence Levels - Handout.pdf|handout]].

| |

| |-

| |

| |2 Minutes

| |

| |Show the students [[#Score Calculation|how to calculate their scores]]. This is already listed on the handout, but it's worth making sure they know what they're doing.

| |

| |-

| |

| |10 Minutes

| |

| |Have the class answer the [[#Mindset Discussion Questions|post-survey discussion questions]].

| |

| |}

| |

| | |

| ==== Score Calculation ====

| |

| | |

| ===== First Survey =====

| |

| | |

| For the first section, the 7-item Actively Open-Minded Thinking Scale developed by Jon Baron, you simply reverse score items 4 and 5 by subtracting each answer from the number 6 (individually), then take the average of all items including those two reverse scores (divide by 7). This yields an overall score for Actively Open-Minded Thinking.

| |

| {{BoxCaution|Since this is a self-report scale, it is influenced by norms and not just actual cognitive behavior, but instructors can use it to discuss the meaning of being actively open-minded and get students to discuss why it was one of the best predictors of performance on the geopolitical forecasting tournament. If we can move student cognitive norms and values to be just a bit more actively open-minded, that's immensely valuable.}}

| |

| ===== Second Survey =====

| |

| | |

| For the second section, which is about Carol Dweck's growth mindset, students should reverse score the first two items (1 and 2 should be subtracted from the number 6 individually), add those scores to 3 and 4, and then take the average of all four items (divide by 4). This yields their growth mindset score.

| |

| {{BoxCaution|A growth mindset means a greater sense of optimism and agency in one's ability to improve; the converse is an "entity" mindset, where you think intelligence and ability are set so that effort will not help very much. Unsurprisingly, those who think effort won't help much tend to put in less effort and, as a consequence, improve less than those who believe improvement is possible. Instructors might want to connect this idea to scientific optimism, which students will have learned about the previous week.}}
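The scoring rules for both surveys can be sketched as below, which may help when checking a student's arithmetic. This is an illustrative sketch only: the function names are hypothetical, and the 1-to-5 answer scale is an assumption inferred from the instruction to subtract from 6, so check it against the handout.

```python
def reverse_score(answer, scale_max=5):
    """Reverse an answer on a 1..scale_max scale (assumed 1-5, so 6 - answer)."""
    return (scale_max + 1) - answer


def survey_score(answers, reversed_items, scale_max=5):
    """Average all items, reverse scoring the 1-based item numbers given."""
    total = 0
    for item_number, answer in enumerate(answers, start=1):
        if item_number in reversed_items:
            answer = reverse_score(answer, scale_max)
        total += answer
    return total / len(answers)


def aot_score(answers):
    """First survey: 7 items, with items 4 and 5 reverse scored."""
    if len(answers) != 7:
        raise ValueError("the AOT survey has 7 items")
    return survey_score(answers, reversed_items={4, 5})


def growth_mindset_score(answers):
    """Second survey: 4 items, with items 1 and 2 reverse scored."""
    if len(answers) != 4:
        raise ValueError("the growth mindset survey has 4 items")
    return survey_score(answers, reversed_items={1, 2})


# Made-up answers for illustration only.
print(aot_score([5, 4, 5, 2, 1, 4, 5]))
print(growth_mindset_score([2, 1, 4, 5]))
```

Both surveys use the same helper, so the only differences are the number of items and which ones are reverse scored.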

| |

| === Self-calibration Activity ===

| |

| {{BoxTip|This activity is '''optional'''. The benefit of using it is that it's the only time the students get an actual score telling them how well calibrated they are. The downside of using this activity is that the students have already done a ''lot'' of credence level surveys and are probably sick of it.}}

| |

| {{LinkCardInternal

| |

| |url=:File:Test Your Knowledge Survey.pdf

| |

| |title="Test Your Knowledge" Handout

| |

| |description=An optional handout to further test your students' calibration.}}

| |

| ==== Instructions ====

| |

| | |

| {| class="wikitable" style="margin-left: 0px; margin-right: auto;"

| |

| |5 Minutes

| |

| |For this activity, give the students the link to the [[:File:Test Your Knowledge Survey.pdf|Test Your Knowledge Survey]] and give them a chance to answer all the questions.

| |

| |-

| |

| |5 Minutes

| |

| |Then give the students [[#Self-calibration Questions and Answers|the answers]] and have them grade their own survey.

| |

| |-

| |

| |5 Minutes

| |

| |After the students have graded their surveys, have them calculate two numbers: their score as a percentage correct (number of questions right divided by 10) and their average confidence level (sum of confidence levels across items divided by 10). Students should then compare those results. If their average confidence is lower than their score (% correct), they were underconfident. If their average confidence is higher than their score (% correct), they were overconfident. Once students have their two numbers, have them indicate in the Zoom poll (below) how well they were calibrated.

| |

| |}
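The comparison in the last step can be sketched as follows. This is a minimal illustration rather than part of the handout: the function name is hypothetical, and it assumes confidence levels are recorded as percentages so that they can be compared directly with the percentage correct.

```python
def calibration_summary(correct_flags, confidences):
    """Return (percent correct, average confidence, verdict) for one student.

    correct_flags: one True/False per question (True = answered correctly).
    confidences:   one confidence level per question, assumed to be in percent.
    """
    if not correct_flags or len(correct_flags) != len(confidences):
        raise ValueError("need one confidence level per graded question")

    percent_correct = 100 * sum(correct_flags) / len(correct_flags)
    average_confidence = sum(confidences) / len(confidences)

    if average_confidence > percent_correct:
        verdict = "overconfident"
    elif average_confidence < percent_correct:
        verdict = "underconfident"
    else:
        verdict = "calibrated"
    return percent_correct, average_confidence, verdict


# Made-up example: 7 of 10 correct with an average confidence of 80%.
flags = [True] * 7 + [False] * 3
levels = [90, 80, 70, 60, 100, 90, 80, 70, 60, 100]
print(calibration_summary(flags, levels))
```

With only ten questions, a gap of a few percentage points is within the noise, so students should read a small difference as roughly calibrated.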

| |

| | |

| ==== Self-calibration Questions and Answers ====

| |

| | |

| # Which is nearer to London? New York or {{Correct|Moscow}}?

| |

| # Who "discovered" New Zealand? Captain Cook or {{Correct|Abel Tasman}}?

| |

| # When did the People's Republic of China join the UN? {{Correct|1971}} or 1972?

| |

| # Which is the second-largest country in South America by land area? {{Correct|Argentina}} or Peru?

| |

| # Which is longer? Panama Canal or {{Correct|Suez Canal}}?

| |

| # Which does Japan produce more of, by weight? {{Correct|Rice}} or wheat?

| |

| # Which was formed first? The Southeast Asia Treaty Organization or the {{Correct|North Atlantic Treaty Organization}}?

| |

| # In what year did Saul Perlmutter win a Nobel Prize in Physics? 2010 or {{Correct|2011}}?

| |

| # Which contains more protein per unit weight? {{Correct|Steak (beef)}} or eggs?

| |

| # Which is larger? The Caspian Sea or the {{Correct|Black Sea}}?<!-- == Overflow ==

| |

| | |

| <div class="toccolours mw-collapsible mw-collapsed" style="overflow:auto;">

| |

| <div style="font-weight:bold;line-height:1.6;">Extra content that's not currently part of the official lesson plan.</div>

| |

| <div class="mw-collapsible-content">

| |

| | |

| === Changemaker Actively Open-minded Thinking Discussion Questions ===

| |

| | |

| # {{Changemaker|In section 12, Alex discusses the differences between an intrapreneur and an entrepreneur. Assign credence levels to each of the following differences to determine if you are better suited to be an intrapreneur or an entrepreneur:}}

| |

| ## {{Changemaker|I can put the company first before myself.}}

| |

| ## {{Changemaker|I have low risk tolerance and prefer stability}}

| |

| ## {{Changemaker|I enjoy the possibility of effecting change at a large scale }}

| |

| ## {{Changemaker|I prefer to have more resources at my disposal, even if that means there can be more pushback from senior management. }}

| |

| {{Changemaker|If your credence levels were quite high, what might it tell you about your ability to be an intrapreneur as opposed to an entrepreneur?}}

| |

| # {{Changemaker|Professor Steven Weber discusses growth mindset and how "Most of the setbacks come from inside, and you decide that what you're doing now isn't really the right way to go, or isn't really what you wanna do. And you make a change". How might this be different from the scientific optimism that we discussed earlier in the semester?}}

| |

| | |

| </div></div> --></restricted>{{NavCard|prev=3.1 Probabilistic Reasoning|next=4.1 Signal and Noise}}

| |

[[Category:Lesson plans]]