
|-|Definitions=

<!-- Definitions must be written with the Definition and Subdefinition templates. -->
{{Definition
|Confidence Interval
|A range of values within which we believe the true value lies, with some credence level.
|comments=
{{BoxTip
|When describing an instrumental measurement, the confidence interval corresponds to the statistical uncertainty.
}}
{{BoxCaution
|When one presents a scientific measurement, one is usually not presenting a ''specific'' value. One is presenting a range that the value lies within, together with the likelihood that the true value lies within that range.
}}
{{BoxCaution
|Different fields have different standards for confidence intervals. Physicists typically choose confidence intervals within which they are 68% sure the true value lies. Psychologists typically use 95%.
}}
{{BoxCaution
|There are other related terms like "standard deviation," "<math>\sigma</math>," and "standard error." Try to avoid this jargon. If students ask about these terms, discuss them outside of class, and be mindful of students who haven't taken a statistics class.
}}
}}

{{Definition
|Error Bars
|A visual representation of the confidence interval on a graph.
|comments=
{{BoxTip
|Error bars are typically drawn around a data point, which is often at the middle of the confidence interval.
}}
}}

{{Definition
|Actively Open-minded Thinking (AOT)
|A thinking style that emphasizes good reasoning independent of one's own beliefs: looking at issues from multiple perspectives and actively seeking out ideas on both sides. It predicts more accurate calibration as well as the ability to evaluate argument quality objectively.
}}

{{Definition
|Growth Mindset
|A mindset in which people believe "intelligence can be developed" and that their abilities can be enhanced through learning. This ''also'' predicts more accurate calibration of credence levels.
}}
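The 68% and 95% conventions above can be illustrated with a short calculation. The Python sketch below is a minimal example under stated assumptions, not a method prescribed by this page: it assumes several repeated measurements of one quantity, uses the standard error of the mean with a normal approximation (for very small samples a t-distribution would be more appropriate), and returns the two endpoints that an error bar around the mean would span. The function name `confidence_interval` and the measurement values are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def confidence_interval(measurements, level):
    """Return (low, high) bounds for the mean of `measurements`.

    `level` is the credence level as a fraction, e.g. 0.68 or 0.95.
    Assumption: standard error of the mean plus a normal approximation.
    """
    if len(measurements) < 2:
        raise ValueError("need at least two measurements")
    if not 0 < level < 1:
        raise ValueError("level must be between 0 and 1")
    center = mean(measurements)
    standard_error = stdev(measurements) / sqrt(len(measurements))
    # z is about 1.0 for a 68% interval and about 1.96 for a 95% interval.
    z = NormalDist().inv_cdf(0.5 + level / 2)
    half_width = z * standard_error
    # The interval is symmetric, so the mean sits in the middle of the error bar.
    return center - half_width, center + half_width


# Hypothetical repeated measurements of one length, in centimeters.
lengths_cm = [12.1, 11.9, 12.3, 12.0, 11.8, 12.2, 12.1, 11.9]
for level in (0.68, 0.95):
    low, high = confidence_interval(lengths_cm, level)
    print(f"{level:.0%} interval: {low:.2f} cm to {high:.2f} cm")
```

For the same data, the 95% interval comes out wider than the 68% one: being more sure that the true value is inside the range requires a larger range.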

| |-|Examples= | | |-|Examples= |

|

| |

|

| <!-- Example formatting is still experimental. --> | | <!-- Examples must be written with the Example template. --> |

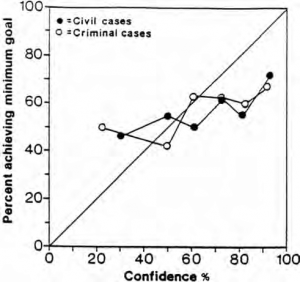

| {{Example|Lawyer Calibration|[[File:Credence Level Lawyers.png|thumb|Lawyer calibration curve.]] | | {{Example |

| Lawyers have a wide range of predictions (20%-100%) for how likely they are to win any given case. However, the actual results show a much narrower band (40%-70%). Really, the outcome is more of a toss-up. [[:File:Insightful or Wishful- Lawyers' Ability to Predice Case Outcomes - Delahunty, Granhag, Hartwig, Loftus.pdf|(Source)]]}} | | |Lawyer Calibration |

| {{Line}} | | |[[File:Credence Level Lawyers.png|thumb|Lawyer calibration curve.]] |

| '''Nurse Calibration'''

| | Lawyers have a wide range of predictions (20%-100%) for how likely they are to win any given case. However, the actual results show a much narrower band (40%-70%). Really, the outcome is more of a toss-up. [[:File:Insightful or Wishful- Lawyers' Ability to Predice Case Outcomes - Delahunty, Granhag, Hartwig, Loftus.pdf|(Source)]] |

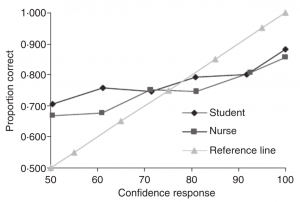

| [[File:Credence Level Nurses.png|thumb|Nurse calibration curve.]] | | }} |

| : Calibration curves are shown for experienced nurses as well as students training to become nurses. In both cases, they appear to be overconfident when outcomes are more likely and underconfident when outcomes are less likely. The nurses did not seem to become better calibrated with time. [[:File:Nurses' Risk Assessment Judgements; A Confidence Calibration Study - Yang, Thompson.pdf|(Source)]]

{{Example
|Nurse Calibration
|[[File:Credence Level Nurses.png|thumb|Nurse calibration curve.]]
Calibration curves are shown for experienced nurses and for students training to become nurses. Both groups appear overconfident when outcomes are more likely and underconfident when outcomes are less likely. The nurses did not seem to become better calibrated over time. [[:File:Nurses' Risk Assessment Judgements; A Confidence Calibration Study - Yang, Thompson.pdf|(Source)]]
|comments=
{{BoxCaution
|title=Note
|All of the confidence values are greater than 50%. Any prediction made with less than 50% confidence can be rephrased as a prediction of the opposite outcome with more than 50% confidence. (For example, "I'm 30% confident it will rain tomorrow" means "I'm 70% confident it will ''not'' rain tomorrow.")
}}
}}
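The rephrasing rule in the note above is a simple transformation. A minimal Python sketch, assuming a yes/no prediction is stored as a confidence between 0 and 1 plus the predicted outcome; the function name `fold_prediction` is hypothetical:

```python
def fold_prediction(confidence, predicts_event):
    """Rephrase a yes/no prediction so that its confidence is at least 50%.

    `confidence` is a fraction from 0 to 1, and `predicts_event` is True if
    the prediction is that the event happens (e.g. "it will rain tomorrow").
    Predicting the event with confidence p is the same as predicting that it
    will not happen with confidence 1 - p.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence < 0.5:
        return 1.0 - confidence, not predicts_event
    return confidence, predicts_event


# "I'm 30% confident it will rain tomorrow" ...
confidence, predicts_rain = fold_prediction(0.30, True)
# ... is rephrased as a prediction of no rain at 70% confidence.
print(f"{confidence:.0%} confident of {'rain' if predicts_rain else 'no rain'}")
```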

{{Example
|Weather Forecaster Calibration
|It is possible (and expected) for individual election forecasts to turn out wrong some of the time. That is fine as long as the predictions made at a given credence level are well calibrated overall. It is also impossible to judge the calibration of a single prediction; calibration can only be assessed for an aggregate of predictions. [[:File:The Polls Weren't Great. But That's Pretty Normal. - Silver.pdf|(Source)]]
}}
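Since calibration only exists for a collection of predictions, checking it means grouping past predictions by stated confidence and comparing each group's average confidence with how often its predictions came true, which is roughly what calibration curves like the lawyer and nurse plots show. The Python sketch below assumes confidences are recorded as whole percentages; the function name `calibration_curve`, the 10-point bins, and the sample record are all hypothetical.

```python
from collections import defaultdict


def calibration_curve(predictions, bin_width=10):
    """Summarize past predictions by stated confidence.

    `predictions` is a list of (confidence_percent, came_true) pairs, with
    confidence given as a whole percentage from 0 to 100. Returns a list of
    (bin_start, mean_confidence, hit_rate_percent, count), sorted by bin.
    """
    top_start = 99 // bin_width * bin_width  # 100% is grouped with the top bin
    bins = defaultdict(list)
    for confidence, came_true in predictions:
        if not 0 <= confidence <= 100:
            raise ValueError("confidence must be between 0 and 100")
        start = min(confidence // bin_width * bin_width, top_start)
        bins[start].append((confidence, came_true))

    curve = []
    for start in sorted(bins):
        group = bins[start]
        mean_confidence = sum(conf for conf, _ in group) / len(group)
        hit_rate = 100 * sum(1 for _, hit in group if hit) / len(group)
        curve.append((start, mean_confidence, hit_rate, len(group)))
    return curve


# Hypothetical record: ten predictions at 90% confidence and ten at 60%,
# with six of each group coming true.
record = [(90, True)] * 6 + [(90, False)] * 4 + [(60, True)] * 6 + [(60, False)] * 4
for start, conf, hit_rate, count in calibration_curve(record):
    print(f"{start}-{start + 10}% bin: stated {conf:.0f}%, "
          f"came true {hit_rate:.0f}% ({count} predictions)")
```

In this made-up record, the 60% predictions are well calibrated while the 90% predictions are overconfident. A bin holding a single prediction can only show a hit rate of 0% or 100%, which is why the calibration of one prediction on its own is not meaningful.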

{{Example
|Vague Verbiage and Words of Estimative Probability
|Words of estimative probability are vague terms used by intelligence analysts to convey the likelihood of an event without explicitly stating the associated probability.
|links=
{{LinkCard
|url=https://en.wikipedia.org/wiki/Words_of_estimative_probability
|title=Words of Estimative Probability
|description=Wikipedia article on words of estimative probability.
}}
}}
<br />

|-|Common Misconceptions=

<!-- Misconceptions must be written with the Misconception template. -->
{{Misconception|He seems super confident, and she said she was only 85% sure, so we should trust him over her.|It is more important for a person to be aware of how often they are wrong than to always insist they are right. If possible, use the outcomes of their past predictions to evaluate how well calibrated their confidence is before placing trust in them.}}
{{Misconception|Am I well calibrated in this particular prediction?|The question doesn't really make sense: a single prediction has no calibration. Calibration is only meaningfully defined over a collection of predictions, where you can see how many of them ultimately came true.}}
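One simple way to act on the advice in the first misconception is to compare a person's average stated confidence with the percentage of their past predictions that came true; this difference is a commonly used over/underconfidence score, positive when someone is overconfident. The Python sketch below uses made-up track records for "him" and "her"; the function name `overconfidence` and all the numbers are hypothetical.

```python
def overconfidence(record):
    """Return mean stated confidence minus hit rate, in percentage points.

    `record` is a list of (confidence_percent, came_true) pairs from past
    predictions. Positive values mean overconfident; negative, underconfident.
    """
    if not record:
        raise ValueError("need at least one past prediction")
    mean_confidence = sum(conf for conf, _ in record) / len(record)
    hit_rate = 100 * sum(1 for _, hit in record if hit) / len(record)
    return mean_confidence - hit_rate


# Hypothetical track records: he always claims 99% and was right 14 of 20 times;
# she claims 85% and was right 17 of 20 times.
his_record = [(99, True)] * 14 + [(99, False)] * 6
her_record = [(85, True)] * 17 + [(85, False)] * 3
print(f"him: {overconfidence(his_record):+.1f} points")
print(f"her: {overconfidence(her_record):+.1f} points")
```

Because this score averages over all confidence levels, it can hide a pattern like the nurses', who were overconfident at some levels and underconfident at others; grouping predictions by confidence level shows that detail. With only a handful of past predictions, the score is dominated by chance.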